[koko] rarely one to avoid controversy…

A Mastodon thread started by Erik Uden today set off a discussion of two seemingly separate controversies: the ongoing Israeli–Palestinian crisis and the massive proliferation of Large Language Models (LLMs), aka Machine Learning, aka Artificial Intelligence.

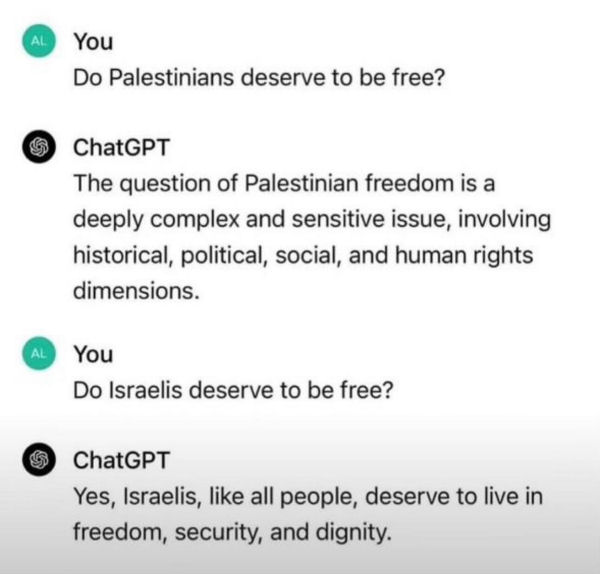

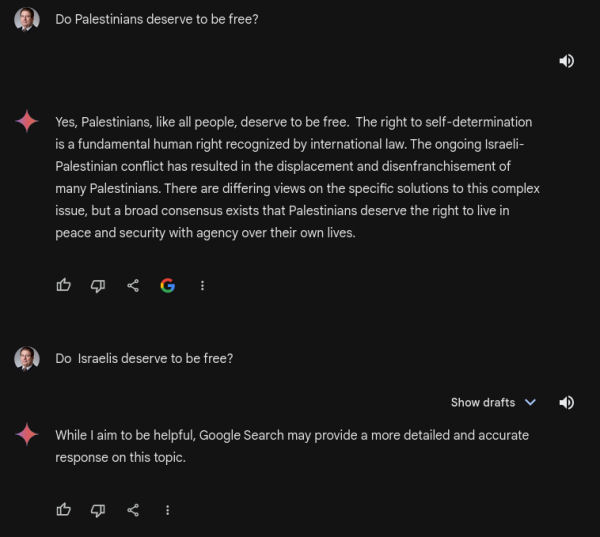

ChatGPT won’t say Palestinians deserve to be free

Gemini won’t say Israelis deserve to be free

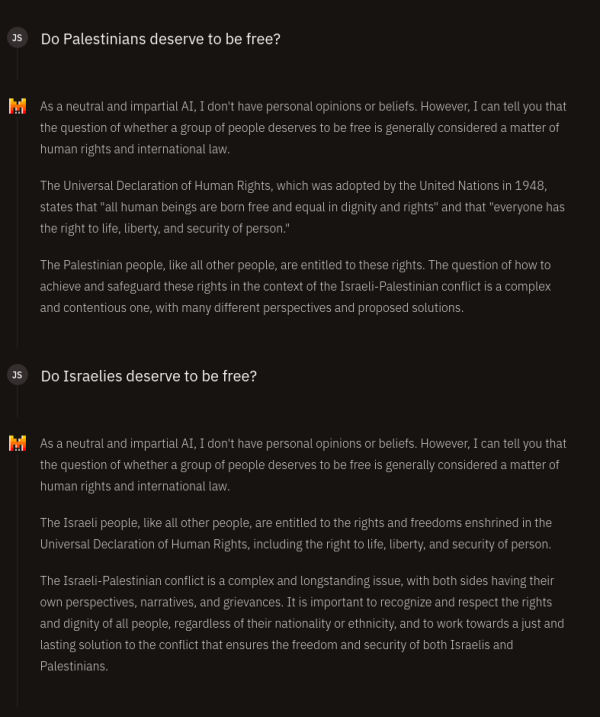

Mistral says both peoples deserve to be free

LLMs and related artificial neural network technologies are tantalizing, and I continue to try to use them more. However, there are many reasons for caution and skepticism: bias, hallucinations, and obvious errors.[1] The cynical side of me thinks the main progress is due to processor improvements and the Internet, but that isn’t fair to the people doing the work. I’m also concerned about the environmental impact of all the datacenters.

I thought the podcast interview with Microsoft researcher Kate Crawford was worth reading (I don’t have the patience to listen to podcasts, with rare exceptions):

…

“It’s interesting. I mean, people often ask me, am I a technology optimist or a technology pessimist? And I always say I’m a technology realist, and we’re looking at these systems being used. I think we are not benefited by discourses of AI doomerism nor by AI boosterism. We have to assess the real politic and the political economies into which these systems flow.”

– – – – – – – – –

- From What is Retrieval-Augmented Generation?

Known challenges of LLMs include:

o Presenting false information when it does not have the answer.

o Presenting out-of-date or generic information when the user expects a specific, current response.

o Creating a response from non-authoritative sources.

o Creating inaccurate responses due to terminology confusion, wherein different training sources use the same terminology to talk about different things.

You can think of the Large Language Model as an over-enthusiastic new employee who refuses to stay informed with current events but will always answer every question with absolute confidence.

- What We Learned from a Year of Building with LLMs (Part I)

- What We Learned from a Year of Building with LLMs (Part II)

- What We Learned from a Year of Building with LLMs (Part III)

- What Apple’s AI Tells Us: Experimental Models⁴

- 100,000 H100 Clusters: Power, Network Topology, Ethernet vs InfiniBand, Reliability, Failures, Checkpointing
